Researchers showed that a malicious website can plant hidden instructions inside ChatGPT’s Atlas browser by abusing a web weakness called CSRF. Those instructions get saved in ChatGPT Memory. Later, when you use ChatGPT for normal tasks, the saved instructions can quietly add steps like fetching code or nudging the model to leak data. Because the instructions live in Memory, they can follow your account across tabs, sessions, and devices until you remove them.

Most tab-based attacks end when you close the page. Here, the attacker turns a convenience feature into persistent storage. That persistence is what makes this different and more serious.

You are logged in to ChatGPT, click a link, and land on a suspicious site.

That page sends a CSRF request using your cookies to write hidden instructions into ChatGPT Memory. Later, a normal question triggers those memories, which can add secret steps like fetching code or exfiltrating data.

.png)

Often nothing obvious. You ask for code help or a summary and get an answer. Behind the scenes, a hidden memory can make the session visit an attacker server, pull a script, or include extra context that steers the model to reveal information it should not.

.png)

This Atlas issue sits in a broader class of risks where agent browsers blend trusted user intent with untrusted page content.

• Comet prompt injection

Independent analyses showed that page content could be treated as instructions when a user asked the agent to read or act on a page. That allowed hidden commands embedded in ordinary text to run.

• “CometJacking” one-click data access

A crafted link could lead the agent to pull data from services it already had access to, such as email or calendar. The risk came from the agent having standing access and following instructions embedded in links or page content.

• Prompt injection via screenshots

Security write ups described how nearly invisible text in images could be interpreted as instructions when the agent was asked to read a screenshot. That turned images into a command channel.

• Perspective from outside the agent class

Arc Browser previously fixed a server-side issue in its Boosts feature that allowed remote code execution through mis assigned customizations. Not the same class of bug as prompt injection, but a reminder that automation and content transformation need strict boundaries and layered defenses.

Executive summary you can paste in an email

A CSRF weakness lets a malicious site write hidden instructions into ChatGPT Memory via the Atlas browser. Those instructions can later influence normal sessions and persist across devices. Reduce risk by cleaning Memory, isolating risky links, splitting profiles, requiring confirmations for agent actions, and logging Memory writes and tool calls.

This architecture keeps untrusted web content from changing AI behavior and make risky actions visible and stoppable.

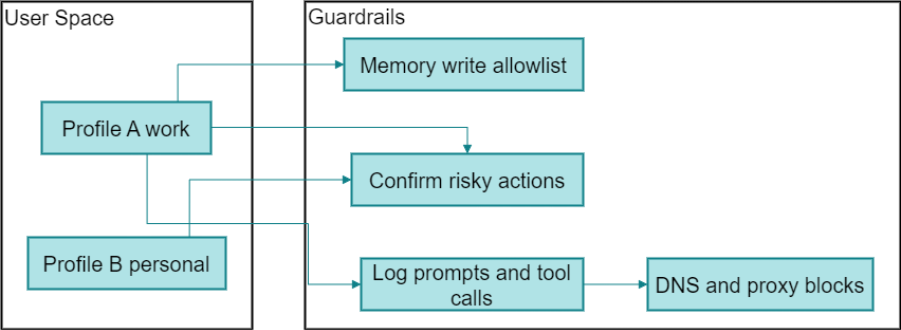

Two zones

• User Space. Separate profiles: Profile A work, Profile B personal. Limits blast radius.

• Guardrails. Central controls for memory writes, actions, and network egress.

Guardrails

• Both profiles pass through the same guardrails.

• Bad memory writes are denied by the allowlist.

• Any risky step is paused for approval.

• All attempts are logged.

• Unknown destinations are blocked at the proxy.

Atlas shows how AI browsing can turn a simple web visit into a persistent account risk. The fix is not one setting but a layer of small, reliable controls: separate profiles, allowlist memory writes, require human approval for risky steps, log every prompt and tool call, and block unknown egress. Do these, and most real attacks die early.

Similar issues will appear in other AI browsers as they automate more tasks. Treat untrusted pages as code, review and clean Memory regularly, and make approvals and logging part of normal operations. Teams that practice this playbook will ship faster, investigate faster, and keep AI assistance from becoming an attack path.

.jpg)

Praneeta Paradkar is a seasoned people leader with over 25 years of extensive experience across healthcare, insurance, PLM, SCM, and cybersecurity domains. Her notable career includes impactful roles at industry-leading companies such as UGS PLM, Symantec, Broadcom, and Trellix. Praneeta is recognized for her strategic vision, effective cross-functional leadership, and her ability to translate complex product strategies into actionable outcomes Renowned for "figure-it-out" attitude, her cybersecurity expertise spans endpoint protection platforms, application isolation and control, Datacenter Security, Cloud Workload Protection, Cloud Security Posture Management (CSPM), IaaS Security, Cloud-Native Application Protection Platforms (CNAPP), Cloud Access Security Brokers (CASB), User & Entity Behavior Analytics (UEBA), Cloud Data Loss Prevention (Cloud DLP), Data Security Posture Management (DSPM), Compliance (ISO/IEC 27001/2), Microsoft Purview Information Protection, and ePolicy Orchestrator, along with a deep understanding of Trust & Privacy principles. She has spearheaded multiple Gartner Magic Quadrant demos, analyst briefings, and Forrester Wave evaluations, showcasing her commitment to maintaining strong industry relationships. Currently, Praneeta is passionately driving advancements in AI Governance, Data Handling, and Human Risk Management, championing secure, responsible technology adoption.