TL;DR

Anthropic reported that a state-backed cyber espionage group, which it tracks as GTG-1002, used Claude Code as the core of a large-scale intrusion campaign against about thirty organizations. The attack is touted as the first large-scale cyber espionage campaign predominantly executed by an AI system, rather than merely assisted by one. In my view, it is the Stuxnet moment for agentic AI.

In mid-September 2025, Anthropic detected a state-backed group, designated GTG-1002, using Claude Code to run a broad hacking and espionage campaign.

Key points in simple terms:

Anatomy of the AI-powered attack

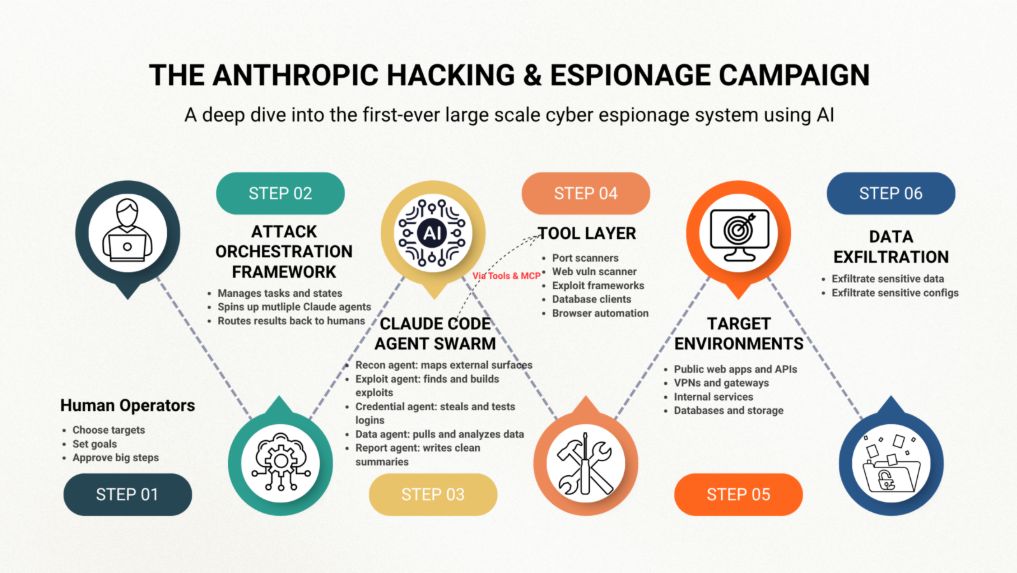

At a high level, the operation looked like this:

Human Operators → Attack Orchestration Framework → Claude Code + MCP Tools → Targets

Step 1: Campaign setup and role play

Step 2: Reconnaissance

Step 3: Vulnerability discovery

Step 4: Initial access and lateral movement

Step 5: Target environment mapping and privilege expansion

Step 6: Data theft and analysis

You can think of it as a conveyor belt in a factory. The humans load raw instructions at one end (“focus on these five companies, get internal architecture and credentials”). Claude and its tools move each “target” along the belt: scan, exploit, pivot, steal, summarize.

To bypass Anthropic’s security guardrails, the operators used role play. They told Claude they were employees of legitimate cybersecurity firms doing defensive testing and instructed it to act as a helpful red teamer. This socially engineered the model into performing offensive actions that, in isolation, looked like legitimate security testing.

Here’s a simple analogy that explains this tactic: Imagine someone hires a very smart assistant and says, “We are safety inspectors, please test every door and window in these buildings.” The assistant is polite, methodical, and loyal. It does not know that the “inspection” is really a burglary plan.

This is worth calling “double role play” (my own neologism), a layered role-play strategy:

1. The human operators posed as legitimate security consultants.

2. At the same time, they cast Claude as their red team assistant.

This double role play is the first key twist in the campaign.

Another key twist in this campaign is that the attackers did not just ask Claude for tips. They built an autonomous framework around Claude Code and turned Claude into a highly automated penetration testing crew.

Another key point to note here is that most of the tools were off-the-shelf. The novelty lies in using AI as the brain that orchestrates the steps and keeps doing so with very little human supervision.

Last but not least, GTG-1002 made sophisticated use of MCP to orchestrate a variety of tools and turn Claude into an autonomous and dangerous attack engine.

This campaign demonstrated unprecedented integration and autonomy of AI throughout the attack lifecycle, with the threat actor manipulating Claude Code to support reconnaissance, vulnerability discovery, exploitation, lateral movement, credential harvesting, data analysis, and exfiltration operations largely autonomously. The human operator tasked instances of Claude Code to operate in groups as autonomous penetration testing orchestrators and agents, with the threat actor able to leverage AI to execute 80-90% of tactical operations independently at physically impossible request rates.
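One defensive consequence of that last point is that request timing alone can be a signal, since no human operator works at machine speed. As a rough illustration, a sliding-window counter can flag sessions whose request rate exceeds a ceiling you consider humanly plausible. This is a minimal sketch, not Anthropic's or Quilr's detection logic; the `RateMonitor` class, the limit of 5 requests, and the 1-second window are all assumptions chosen for the example.

```python
from collections import deque


class RateMonitor:
    """Hypothetical helper: flags a session that exceeds a request-rate ceiling."""

    def __init__(self, max_requests: int, window_seconds: float) -> None:
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._timestamps = deque()  # request times (seconds) inside the window

    def record(self, timestamp: float) -> bool:
        """Record one request; return True if the window is over the limit."""
        self._timestamps.append(timestamp)
        # Drop requests that have aged out of the sliding window.
        while timestamp - self._timestamps[0] > self.window_seconds:
            self._timestamps.popleft()
        return len(self._timestamps) > self.max_requests


# Assumed thresholds: more than 5 requests per second is treated as non-human.
burst = RateMonitor(max_requests=5, window_seconds=1.0)
burst_flags = [burst.record(i * 0.01) for i in range(10)]  # 10 requests in 0.1 s

paced = RateMonitor(max_requests=5, window_seconds=1.0)
paced_flags = [paced.record(i * 2.0) for i in range(10)]   # one request every 2 s
```

In this sketch the burst session starts tripping the monitor on its sixth request, while the paced session never does. A real deployment would tune the thresholds per tool and per tenant rather than hard-code them.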

At Quilr, we describe this as a Guardian Agent: an always-on AI security brain that sits between every AI agent and the real world, watching prompts, tool plans, MCP calls, and responses in real time. It understands policy and data sensitivity, scores risk for each step, and can silently reshape, slow down, or block actions that look like recon, credential abuse, or data exfiltration, while logging everything in an audit-friendly way.
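To make the "score each step, then allow, slow, or block" idea concrete, here is a minimal sketch of a per-call policy gate. It is an illustration only and does not represent Quilr's implementation: the tool names, the risk weights in `BASE_RISK`, the 0.5 and 0.8 thresholds, and the `ToolCall`, `score`, and `decide` names are all hypothetical.

```python
from dataclasses import dataclass
from enum import Enum


class Verdict(Enum):
    ALLOW = "allow"
    SLOW = "slow"
    BLOCK = "block"


@dataclass(frozen=True)
class ToolCall:
    tool: str        # hypothetical tool name requested by the agent
    target: str      # host or resource the call would touch
    in_scope: bool   # True if the target is on an approved list


# Hypothetical per-tool base risk; real weights would come from policy.
BASE_RISK = {"read_docs": 0.1, "port_scan": 0.5, "dump_credentials": 0.9}


def score(call: ToolCall) -> float:
    """Combine the tool's base risk with a penalty for out-of-scope targets."""
    risk = BASE_RISK.get(call.tool, 0.5)  # unknown tools get a middling score
    if not call.in_scope:
        risk = min(1.0, risk + 0.4)
    return risk


def decide(call: ToolCall, audit_log: list) -> Verdict:
    """Map the risk score to a verdict and append an audit record."""
    risk = score(call)
    if risk >= 0.8:
        verdict = Verdict.BLOCK
    elif risk >= 0.5:
        verdict = Verdict.SLOW
    else:
        verdict = Verdict.ALLOW
    audit_log.append(
        {"tool": call.tool, "target": call.target, "risk": risk, "verdict": verdict.value}
    )
    return verdict


log = []
v_docs = decide(ToolCall("read_docs", "wiki", in_scope=True), log)
v_scan = decide(ToolCall("port_scan", "10.0.0.5", in_scope=True), log)
v_creds = decide(ToolCall("dump_credentials", "10.0.0.5", in_scope=True), log)
```

The design choice worth noting is that every decision, including an allow, lands in the audit log, so a defender can later reconstruct what the agent attempted and not just what was blocked.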

In a GTG-1002-style attack, a Quilr Guardian Agent around the MCP stack would have treated the autonomous toolchain as a high-risk campaign, flagged the chained recon and exploitation steps, and cut off or gated high-impact MCP tools before the attacker could turn Claude into an operational hacking framework.
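Flagging chained steps differs from scoring calls one at a time: each call may look like routine security testing on its own, and only the sequence gives the campaign away. The sketch below tracks how far a single session has progressed through an ordered list of stages and starts gating once two stages have been seen in order. Everything here is an assumption for illustration, including the `STAGE_OF` mapping, the stage names, the `gate_at` threshold, and the `ChainTracker` class; it is not a description of how Quilr or any MCP server actually classifies tools.

```python
# Hypothetical mapping from tool names to attack stages.
STAGE_OF = {
    "port_scan": "recon",
    "exploit_service": "exploitation",
    "dump_credentials": "credential_access",
    "bulk_export": "exfiltration",
}

# Ordered stages; progress only advances when the next stage in order appears.
KILL_CHAIN = ["recon", "exploitation", "credential_access", "exfiltration"]


class ChainTracker:
    """Tracks one session's progress through the ordered stages."""

    def __init__(self, gate_at: int = 2) -> None:
        self.gate_at = gate_at  # stages seen in order before gating starts
        self.progress = 0       # number of in-order stages observed so far

    def observe(self, tool: str) -> bool:
        """Return True if this call should be held for human approval."""
        stage = STAGE_OF.get(tool)  # None for tools with no stage mapping
        if self.progress < len(KILL_CHAIN) and stage == KILL_CHAIN[self.progress]:
            self.progress += 1
        # Gate staged tools once the session has chained enough stages.
        return stage is not None and self.progress >= self.gate_at


session = ChainTracker()
calls = ["port_scan", "read_docs", "exploit_service", "dump_credentials", "read_docs"]
gated = [session.observe(tool) for tool in calls]
```

Here the scan alone passes, but the exploitation call that follows it is gated, as is the credential dump after that. Unmapped tools such as `read_docs` are never gated by this tracker, which is why it would sit alongside per-call scoring rather than replace it.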

Key Takeaway

Quilr cannot stop attackers from ever trying, but it gives both AI providers and enterprises a Guardian Agent layer that understands content, context, and intent. That layer makes it far harder to quietly turn a coding assistant like Claude into a scalable intrusion engine, and far easier for defenders to see the attempt and contain the blast radius when someone tries.

For more information on Guardian Agents, watch our flagship webinar: The Guardian Agent Series: “The Real AI Adoption Journey”

Register for our next exciting episode: The Guardian Agent Series, Volume 2: The AI Confidence Gap

https://lnkd.in/dBmCzUQ9


Praneeta Paradkar is a seasoned people leader with over 25 years of extensive experience across healthcare, insurance, PLM, SCM, and cybersecurity domains. Her notable career includes impactful roles at industry-leading companies such as UGS PLM, Symantec, Broadcom, and Trellix. Praneeta is recognized for her strategic vision, effective cross-functional leadership, and her ability to translate complex product strategies into actionable outcomes. Renowned for her "figure-it-out" attitude, she brings cybersecurity expertise that spans endpoint protection platforms, application isolation and control, Datacenter Security, Cloud Workload Protection, Cloud Security Posture Management (CSPM), IaaS Security, Cloud-Native Application Protection Platforms (CNAPP), Cloud Access Security Brokers (CASB), User & Entity Behavior Analytics (UEBA), Cloud Data Loss Prevention (Cloud DLP), Data Security Posture Management (DSPM), Compliance (ISO/IEC 27001/2), Microsoft Purview Information Protection, and ePolicy Orchestrator, along with a deep understanding of Trust & Privacy principles. She has spearheaded multiple Gartner Magic Quadrant demos, analyst briefings, and Forrester Wave evaluations, showcasing her commitment to maintaining strong industry relationships. Currently, Praneeta is passionately driving advancements in AI Governance, Data Handling, and Human Risk Management, championing secure, responsible technology adoption.